2021. 10. 1. 00:59ㆍai/Deep Learning

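How to use ImageDataGenerator

(Slower than the CSV approach, but more memory-efficient.)

→How can we train on images without first converting them to CSV format?

→ImageDataGenerator: a generator that can pull data from a folder (directory) or from a DataFrame.

→Using the flow_from_directory() function, images are loaded from a folder and labeled automatically!

ImageDataGenerator pulls images straight from folders, so it is hard to use unless the folders are set up properly.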
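To make the automatic labeling concrete, here is a minimal sketch of the idea: subfolder names are sorted and each one gets an integer label. This only mimics the behavior described above; it is not the Keras implementation, and `infer_class_indices` is a hypothetical helper name.

```python
import os
import tempfile


def infer_class_indices(root_dir):
    """Map each subfolder name to an integer label, in sorted order."""
    class_names = sorted(
        name for name in os.listdir(root_dir)
        if os.path.isdir(os.path.join(root_dir, name))
    )
    return {name: index for index, name in enumerate(class_names)}


# Build a throwaway folder tree to demonstrate the mapping
with tempfile.TemporaryDirectory() as root:
    for name in ("dogs", "cats"):
        os.makedirs(os.path.join(root, name))
    demo_indices = infer_class_indices(root)
    print(demo_indices)  # {'cats': 0, 'dogs': 1}
```

With a real generator, the mapping that was actually applied can be checked through its `class_indices` attribute.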

With Google Drive we could use a storage service, but that costs money, so we work on the local PC.

local pc

1. Jupyter Home (python_ml)

2. data (directory)

(0) cat_dog_full (25000 = Cat 12500 / Dog 12500)
    (1) train
        (1) cats (7000)
        (2) dogs (7000)
    (2) validation
        (1) cats (3000)
        (2) dogs (3000)
    (3) test
        (1) cats (2500)
        (2) dogs (2500)

(0) cat_dog_small (4000)
    (1) train
        (1) cats (1000)
        (2) dogs (500)
    (2) validation
        (1) cats (1000)
        (2) dogs (500)
    (3) test
        (1) cats (500)
        (2) dogs (500)

Do we do the work above by hand? No, we handle it with code.
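The post does not show the splitting script itself, so the following is only a sketch of how the folder layout above could be produced. It assumes the source images are named `<class>.<index>.jpg` (for example `cat.0.jpg`); that naming, the function name `split_images`, and the source path in the usage comment are assumptions, not taken from the original.

```python
import os
import shutil


def split_images(src_dir, dst_dir, counts, classes=("cat", "dog")):
    """Copy images into dst_dir/<split>/<class>s folders.

    counts maps a split name to the number of images per class,
    e.g. {"train": 7000, "validation": 3000, "test": 2500}.
    Assumes files are named '<class>.<index>.jpg' (hypothetical naming).
    """
    for cls in classes:
        start = 0
        for split, n in counts.items():
            target = os.path.join(dst_dir, split, cls + "s")
            os.makedirs(target, exist_ok=True)
            # Each split takes the next n consecutive indices
            for i in range(start, start + n):
                fname = "{}.{}.jpg".format(cls, i)
                shutil.copyfile(os.path.join(src_dir, fname),
                                os.path.join(target, fname))
            start += n


# Hypothetical usage for the full dataset (the source path is an assumption):
# split_images('C:/python_ML/data/cat_dog/train',
#              'C:/python_ML/data/cat_dog_full',
#              {"train": 7000, "validation": 3000, "test": 2500})
```

Copying rather than moving keeps the original download intact, at the cost of extra disk space.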

3. cat_dog (directory) - train (csv)

[25,000 images = (dogs 12500) (cats 12500)]

code

# The folders were created in the right layout and the images were split and saved into them!
# Let's try ImageDataGenerator!
import os
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import matplotlib.pyplot as plt

# One variable per image folder
train_dir = 'C:/python_ML/data/cat_dog_full/train'
valid_dir = 'C:/python_ML/data/cat_dog_full/validation'

# Create the ImageDataGenerator!
# It is responsible for fetching images from a given folder.
# It can also scale the images as they are loaded.
train_datagen = ImageDataGenerator(rescale=1/255)  # multiplying each color value by 1/255 (dividing by 255)
valid_datagen = ImageDataGenerator(rescale=1/255)  # has the same effect as MinMaxScaling

ImageDataGenerator() generates the data.

flow_from_directory() specifies where that data comes from.
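As a quick check of the scaling claim, the sketch below compares multiplying by 1/255 with min-max scaling over the fixed 8-bit range [0, 255]. The sample pixel values and the helper `min_max_scale` are made up for illustration; note that this is min-max scaling with a fixed range, not one computed per image.

```python
import math


def min_max_scale(values, low=0, high=255):
    """Min-max scale values from [low, high] to [0, 1]."""
    return [(v - low) / (high - low) for v in values]


pixels = [0, 64, 128, 255]                   # sample 8-bit intensities
rescaled = [p * (1 / 255) for p in pixels]   # what rescale=1/255 does per pixel
scaled = min_max_scale(pixels)

# The two results agree up to floating-point rounding
same = all(math.isclose(a, b) for a, b in zip(rescaled, scaled))
print(same)  # True
```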

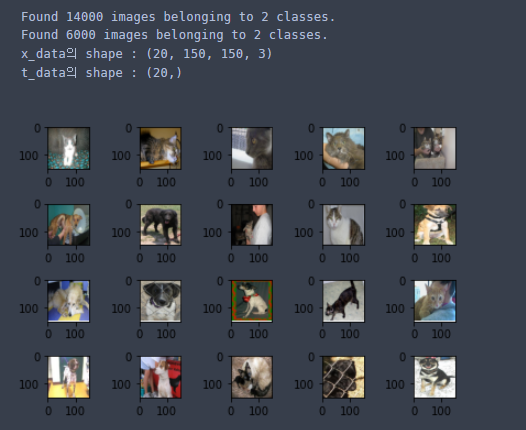

train_generator = train_datagen.flow_from_directory(
    train_dir,                  # target directory
    classes=['cats', 'dogs'],   # labels (0, 1) follow the order cats, dogs
                                # if classes is omitted, labels follow the folder names in ascending order
    target_size=(150,150),      # image resize
    batch_size=20,              # fetch 20 images at a time,
                                # regardless of their label
    class_mode='binary'         # for binary classification: binary
                                # for multi-class classification: categorical
)

valid_generator = valid_datagen.flow_from_directory(
    valid_dir,
    classes=['cats', 'dogs'],
    target_size=(150,150),
    batch_size=20,
    class_mode='binary')

# Iterating with the generator
for x_data, t_data in train_generator:
    print('x_data shape : {}'.format(x_data.shape))
    print('t_data shape : {}'.format(t_data.shape))
    break   # leave the loop after the first batch

# Show the 20 images of one batch on a 4 x 5 grid
fig = plt.figure()
ax = list()
for i in range(20):
    ax.append(fig.add_subplot(4,5,i+1))

for x_data, t_data in train_generator:
    for idx, img_data in enumerate(x_data):
        ax[idx].imshow(img_data)
    break   # one batch is enough

plt.tight_layout()
plt.show()

Training

## Dogs vs. Cats CNN using ImageDataGenerator
import numpy as np
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Flatten, Dense, Conv2D, MaxPooling2D, Dropout
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import os
import matplotlib.pyplot as plt

# The ImageDataGenerator has to be created first!
train_dir = 'C:/python_ML/data/cat_dog_full/train'
valid_dir = 'C:/python_ML/data/cat_dog_full/validation'

# Create the ImageDataGenerator!
# It is responsible for fetching images from a given folder.
# It can also scale the images as they are loaded.
train_datagen = ImageDataGenerator(rescale=1/255)  # has the same effect as MinMaxScaling
valid_datagen = ImageDataGenerator(rescale=1/255)

train_generator = train_datagen.flow_from_directory(
    train_dir,                  # target directory
    classes=['cats', 'dogs'],   # labels (0, 1) follow the order cats, dogs
                                # if classes is omitted, labels follow the folder names in ascending order
    target_size=(150,150),      # image resize
    batch_size=20,              # fetch 20 images at a time,
                                # regardless of their label
    class_mode='binary'         # for binary classification: binary
                                # for multi-class classification: categorical
)

valid_generator = valid_datagen.flow_from_directory(
    valid_dir,
    classes=['cats', 'dogs'],
    target_size=(150,150),
    batch_size=20,
    class_mode='binary')

# The data is ready!
# CNN model
model = Sequential()

model.add(Conv2D(filters=32,
                 kernel_size=(3,3),
                 activation='relu',
                 padding='SAME',
                 input_shape=(150,150,3)))
model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Conv2D(filters=64,
                 kernel_size=(3,3),
                 activation='relu',
                 padding='SAME'))
model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Conv2D(filters=128,
                 kernel_size=(3,3),
                 activation='relu',
                 padding='SAME'))
model.add(Conv2D(filters=128,
                 kernel_size=(3,3),
                 activation='relu',
                 padding='SAME'))
model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Conv2D(filters=64,
                 kernel_size=(3,3),
                 activation='relu',
                 padding='SAME'))
model.add(Conv2D(filters=32,
                 kernel_size=(3,3),
                 activation='relu',
                 padding='SAME'))
model.add(MaxPooling2D(pool_size=(2,2)))

# FC layer
# Acts as the input layer
model.add(Flatten())   # reshape the whole batch to 2-D: (batch, features)

# Dropout layer (used to avoid overfitting)
model.add(Dropout(rate=0.5))

# Hidden layer
model.add(Dense(units=256,
                activation='relu'))

# Output layer
model.add(Dense(units=1,
                activation='sigmoid'))

# Model summary
# print(model.summary())

# Optimizer settings
model.compile(optimizer=Adam(learning_rate=1e-4),
              loss='binary_crossentropy',
              metrics=['accuracy'])
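To see what the Flatten layer receives, the arithmetic can be worked through by hand. The sketch below assumes the default MaxPooling2D behavior (stride equal to the pool size, no padding), so each pooling layer floor-divides the spatial size by 2, while the `padding='SAME'` convolutions leave it unchanged. `pooled_size` is a hypothetical helper for illustration.

```python
def pooled_size(size, pool=2):
    """Spatial size after a max-pooling layer with stride == pool size and no padding."""
    return size // pool


size = 150            # input images are 150 x 150
sizes = [size]
for _ in range(4):    # the model above has four MaxPooling2D layers
    size = pooled_size(size)
    sizes.append(size)

last_filters = 32     # filters of the final Conv2D layer
flatten_units = size * size * last_filters

print(sizes)          # [150, 75, 37, 18, 9]
print(flatten_units)  # 2592
```

Uncommenting `print(model.summary())` is the way to confirm these numbers against the real model.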

# Learning
# history = model.fit(train_x_data_norm.reshape(-1,80,80,1),
#                     train_t_data.reshape(-1,1),
#                     epochs=200,
#                     batch_size=100,
#                     verbose=1,
#                     validation_split=0.3)

history = model.fit(train_generator,       # yields 20 images per step, but one epoch must cover 14000 images
                    steps_per_epoch=700,   # number of steps per epoch: 20 * 700 = 14000
                    epochs=30,
                    verbose=1,
                    validation_data=valid_generator,
                    validation_steps=300)  # 20 * 300 = 6000
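The step counts are just the number of images divided by the batch size. A small sketch of that arithmetic follows; `steps_for` is a hypothetical helper, and rounding up is a choice made here so that a final partial batch would still be counted when the sizes do not divide evenly.

```python
import math


def steps_for(num_samples, batch_size):
    """Number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


train_steps = steps_for(14000, 20)   # 7000 cats + 7000 dogs
valid_steps = steps_for(6000, 20)    # 3000 cats + 3000 dogs

print(train_steps, valid_steps)      # 700 300
```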

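# The cats and dogs example

# The earlier CSV-based approach is limited in practice because of memory constraints!

# There is also the overhead of analyzing the image files to build the CSV file!

# Even so, we converted to CSV and ran training and evaluation to get a rough idea of our model's accuracy.

# The accuracy came out at around 75%!

# To solve these problems we will use ImageDataGenerator!

# Training on the full dataset is too slow locally, so it has to be done on Colab!

# But to run it on Colab, our image files have to be uploaded to Google Drive!

# If you try uploading 25,000 images to Google Drive you will find out... they don't upload.

# It is possible with the kaggle API!

# So what should we do? => Use the paid Google Cloud Platform (storage service)!

# Or use AWS (Amazon Web Services)! (available during the project period)

# We have neither!

# So what should we do???

# Instead of the full dataset, upload only part of the image data (4,000 images) to Google Drive,

# then train and evaluate with the ImageDataGenerator and CNN code written above!

# Naturally the performance won't be good.. there is too little training data! => What to do about that? Next time!!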
