2021. 9. 30. 21:09ㆍai/Deep Learning

## Training on the full Kaggle Dogs vs. Cats image set using a CSV file

import numpy as np

import pandas as pd

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Flatten, Dense, Conv2D, MaxPooling2D, Dropout

from tensorflow.keras.optimizers import Adam, SGD, RMSprop

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler

# Raw Data Loading

df = pd.read_csv('/content/drive/MyDrive/융복합 프로젝트형 AI 서비스 개발(2021.06)/09월/30일(목요일)/train.csv')

display(df.head(), df.shape) # (25000, 6401)

# Separate the image data from the label data

t_data = df['label'].values # [0 0 0 ... 0 1 1]

x_data = df.drop('label', axis=1, inplace=False).values # 2-D ndarray, one row of 6400 pixels per image

# Check one image

plt.imshow(x_data[0].reshape(80,80), cmap='gray')

plt.show()
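Each CSV row holds 6,400 pixel values, which is why `reshape(80,80)` recovers an image. A minimal sketch with synthetic data (the array `rows` is a hypothetical stand-in for `x_data`) showing both the 2-D view that `imshow` receives and the 4-D view passed to the network later in this post:

```python
import numpy as np

# Hypothetical stand-in for x_data: 3 flattened 80x80 grayscale images.
rows = np.arange(3 * 6400, dtype=np.float32).reshape(3, 6400)

# One row -> one 80x80 image (row-major: pixel 80 starts the second image row).
first_image = rows[0].reshape(80, 80)

# All rows -> (samples, height, width, channels), the layout Conv2D expects.
batch = rows.reshape(-1, 80, 80, 1)

print(first_image.shape)  # (80, 80)
print(batch.shape)        # (3, 80, 80, 1)
```

The `-1` lets NumPy infer the number of samples, so the same call works for the train and test arrays.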

# Split into train and test data

train_x_data, test_x_data, train_t_data, test_t_data = train_test_split(x_data,

t_data,

test_size=0.3,

stratify=t_data,

random_state=0)
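The `stratify` argument keeps the class ratio the same in both splits. A small sketch on synthetic labels (the arrays `features` and `labels` are hypothetical, and the 80/20 imbalance is chosen only to make the effect visible):

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical imbalanced labels: 80 zeros and 20 ones.
labels = np.array([0] * 80 + [1] * 20)
features = np.arange(100).reshape(-1, 1)

x_tr, x_te, t_tr, t_te = train_test_split(
    features, labels, test_size=0.3, stratify=labels, random_state=0)

# With stratify, the share of class 1 is preserved in both splits.
print(len(t_tr), len(t_te))
print(t_tr.mean(), t_te.mean())
```

Without `stratify`, the two shares would generally differ from split to split.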

# Normalization

scaler = MinMaxScaler()

scaler.fit(train_x_data)

train_x_data_norm = scaler.transform(train_x_data)

test_x_data_norm = scaler.transform(test_x_data)
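`MinMaxScaler` learns a separate minimum and maximum for every column (here, every pixel position) from the training data only, then applies the same mapping to the test data. A minimal sketch on made-up values (the arrays `train` and `test` are hypothetical) that also shows a consequence: test values outside the training range land outside [0, 1]. Dividing by 255 is shown only as a common alternative for 8-bit images, an assumption rather than what this post does.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical pixel data: 4 training rows and 2 test rows, 3 pixels each.
train = np.array([[0, 10, 255],
                  [50, 20, 255],
                  [100, 30, 0],
                  [200, 40, 128]], dtype=float)
test = np.array([[250, 10, 64],
                 [0, 50, 255]], dtype=float)

scaler = MinMaxScaler()
scaler.fit(train)                      # per-column min/max from train only
train_norm = scaler.transform(train)
test_norm = scaler.transform(test)     # reuses the training min/max

# Training columns span exactly [0, 1]; test values may exceed that range.
print(train_norm.min(axis=0), train_norm.max(axis=0))
print(test_norm)

# Alternative (assumption): a fixed scale for 8-bit pixel values.
test_div255 = test / 255.0
```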

# CNN model implementation

model = Sequential()

model.add(Conv2D(filters=32,

kernel_size=(3,3),

activation='relu',

padding='SAME',

input_shape=(80,80,1)))

model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Conv2D(filters=64,

kernel_size=(3,3),

activation='relu',

padding='SAME'))

model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Conv2D(filters=128,

kernel_size=(3,3),

activation='relu',

padding='SAME'))

model.add(Conv2D(filters=128,

kernel_size=(3,3),

activation='relu',

padding='SAME'))

model.add(MaxPooling2D(pool_size=(2,2)))

model.add(Conv2D(filters=64,

kernel_size=(3,3),

activation='relu',

padding='SAME'))

model.add(Conv2D(filters=32,

kernel_size=(3,3),

activation='relu',

padding='SAME'))

model.add(MaxPooling2D(pool_size=(2,2)))

# FC Layer

# Acts as the input layer

model.add(Flatten()) # flattens each sample, so the data becomes 2-D (samples, features)

# Dropout layer (used to avoid overfitting)

model.add(Dropout(rate=0.5))

# Hidden layer

model.add(Dense(units=256,

activation='relu'))

# Output layer

model.add(Dense(units=1,

activation='sigmoid'))

# model summary

# print(model.summary())
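The shapes that `model.summary()` would report can be worked out by hand. A rough sketch of the arithmetic, assuming the Keras defaults used above (stride 1 for `Conv2D`, stride equal to `pool_size` for `MaxPooling2D`):

```python
# 'SAME'-padded 3x3 convolutions with stride 1 keep height and width unchanged,
# so only the 2x2 max pooling layers shrink the feature map.
size = 80
for _ in range(4):          # the model has four MaxPooling2D layers
    size //= 2              # 80 -> 40 -> 20 -> 10 -> 5

last_filters = 32           # filters in the final Conv2D layer
flat_features = size * size * last_filters

# Dense(256) on top of Flatten: one weight per input per unit, plus biases.
dense_params = flat_features * 256 + 256

print(size, flat_features, dense_params)  # 5 800 205056
```

So `Flatten` hands 800 features per image to the hidden layer.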

# Optimizer settings

model.compile(optimizer=Adam(learning_rate=1e-4),

loss='binary_crossentropy',

metrics=['accuracy'])

# Learning

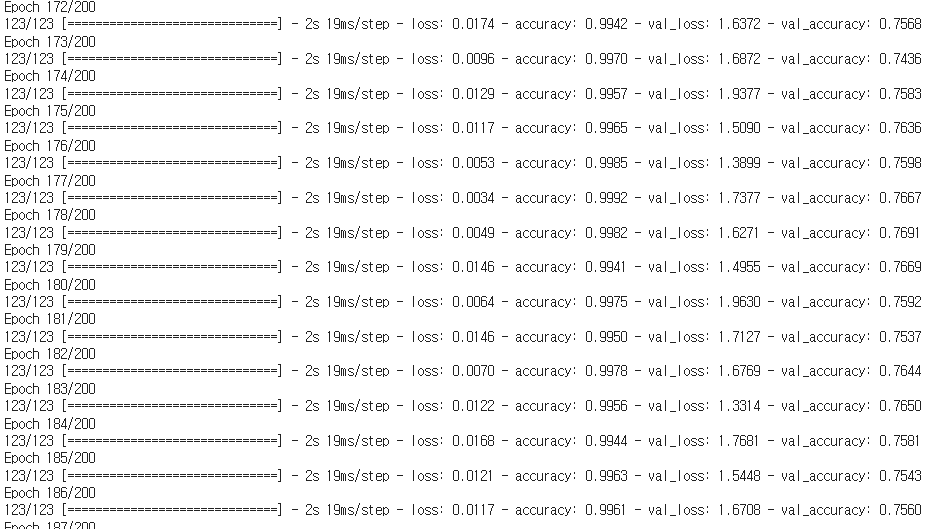

history = model.fit(train_x_data_norm.reshape(-1,80,80,1),

train_t_data.reshape(-1,1),

epochs=200,

batch_size=100,

verbose=1,

validation_split=0.3)

# Evaluation (using the test data)

result = model.evaluate(test_x_data_norm.reshape(-1,80,80,1),

test_t_data.reshape(-1,1))

print("Our model's [loss, accuracy] : {}".format(result))

# Our model's [loss, accuracy] : [1.8884376287460327, 0.765333354473114]
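`evaluate` returns the loss first and the accuracy second. The two can diverge: accuracy only counts thresholded predictions, while binary cross-entropy also penalizes confidence. A test loss near 1.9 next to 0.77 accuracy is therefore consistent with (though not proof of) some confidently wrong predictions. A toy illustration with made-up predictions (the helper functions and values below are hypothetical, not taken from this model):

```python
import numpy as np

def binary_crossentropy(t, y, eps=1e-7):
    """Mean binary cross-entropy; eps avoids log(0)."""
    y = np.clip(y, eps, 1 - eps)
    return float(np.mean(-(t * np.log(y) + (1 - t) * np.log(1 - y))))

def accuracy(t, y, threshold=0.5):
    """Share of predictions on the correct side of the threshold."""
    return float(np.mean((y > threshold).astype(int) == t))

t = np.array([1, 1, 1, 0])
y_confident = np.array([0.99, 0.99, 0.99, 0.999])  # last one confidently wrong
y_mild = np.array([0.6, 0.6, 0.6, 0.6])            # last one mildly wrong

# Same accuracy in both cases, very different loss.
print(accuracy(t, y_confident), binary_crossentropy(t, y_confident))
print(accuracy(t, y_mild), binary_crossentropy(t, y_mild))
```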

# Compare the loss and accuracy on the training data

# with the loss and accuracy on the validation data in a graph

# print(history.history)

# print(history.history.keys()) # dict_keys(['loss', 'accuracy', 'val_loss', 'val_accuracy'])

train_acc = history.history['accuracy']

train_loss = history.history['loss']

valid_acc = history.history['val_accuracy']

valid_loss = history.history['val_loss']
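Besides plotting, the same lists can be inspected numerically, for example to find the epoch where the validation loss bottoms out. A small sketch with invented numbers (`history_dict` is a hypothetical stand-in for `history.history`):

```python
import numpy as np

# Hypothetical history values; the real ones come from history.history.
history_dict = {
    'loss':     [0.69, 0.60, 0.50, 0.40, 0.30],
    'val_loss': [0.68, 0.62, 0.58, 0.61, 0.70],
}

val_loss = np.array(history_dict['val_loss'])
best_epoch = int(np.argmin(val_loss))   # 0-based index of the lowest val_loss

# Gap between validation and training loss at the last epoch.
final_gap = history_dict['val_loss'][-1] - history_dict['loss'][-1]

print(best_epoch + 1, round(final_gap, 2))  # 3 0.4
```

A validation loss that rises after its minimum while the training loss keeps falling is the usual sign of overfitting.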

# Draw the graphs with matplotlib

# Left: accuracy comparison, right: loss comparison

fig = plt.figure()

fig_1 = fig.add_subplot(1,2,1)

fig_2 = fig.add_subplot(1,2,2)

fig_1.plot(train_acc, color='b', label='training accuracy')

fig_1.plot(valid_acc, color='r', label='validation accuracy')

fig_1.legend()

fig_2.plot(train_loss, color='b', label='training loss')

fig_2.plot(valid_loss, color='r', label='validation loss')

fig_2.legend()

plt.tight_layout()

plt.show()
